Abstract

The binding between proteins and ligands plays a crucial role in drug discovery. Previous deep learning approaches have shown promising results compared with traditional, computationally intensive methods, but they generalize poorly because supervised data is limited. In this paper, we propose to learn protein-ligand binding representations in a self-supervised manner. Unlike existing pre-training approaches, which treat proteins and ligands individually, we focus on discerning the intricate binding patterns from fine-grained interactions. Specifically, the self-supervised learning problem is formulated as predicting the final binding complex structure given a pocket and a ligand, using a Transformer-based interaction module that naturally emulates the binding process. To ensure the representation carries rich binding information, we introduce two pre-training tasks, atomic pairwise distance map prediction and mask ligand reconstruction, which together model the fine-grained interactions in both structure and feature space. Extensive experiments demonstrate the superiority of our method across various binding tasks, including protein-ligand affinity prediction, virtual screening, and protein-ligand docking.

Method

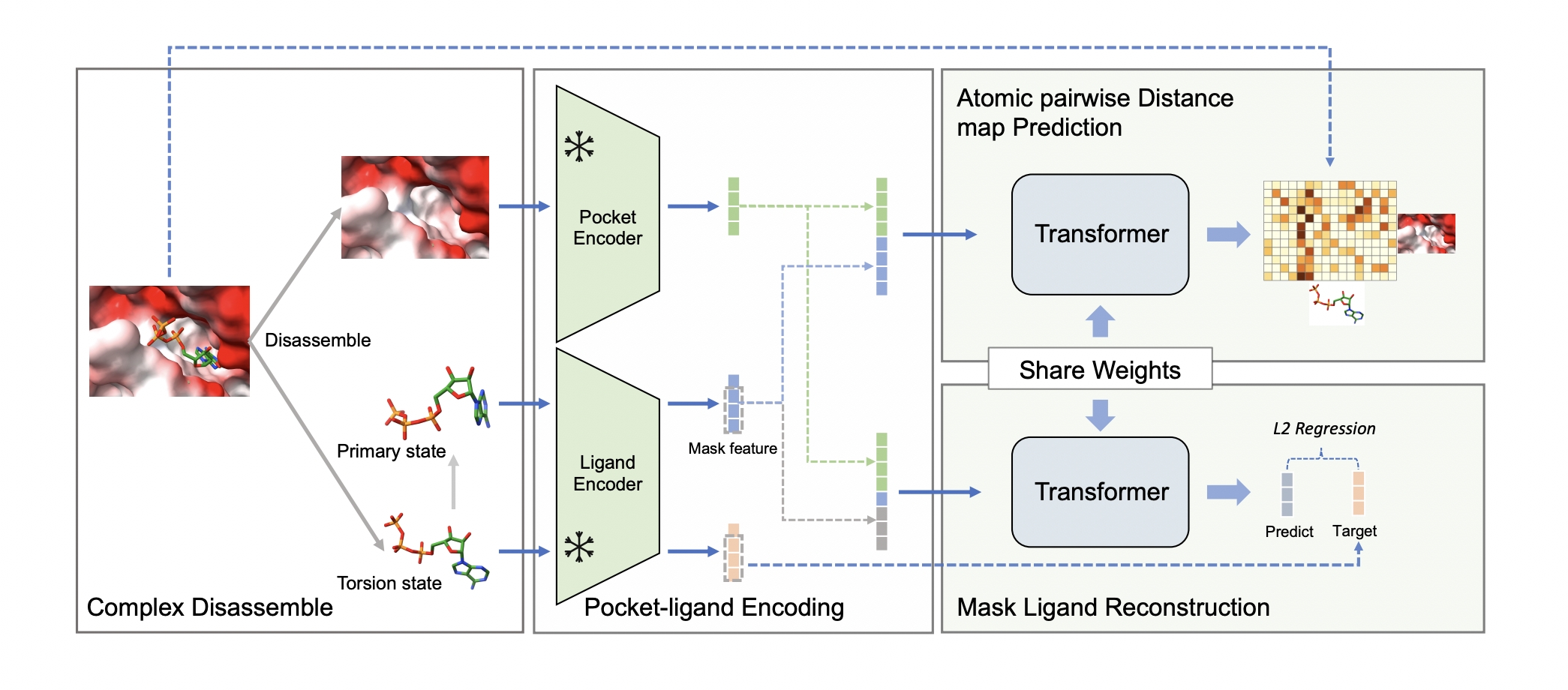

We propose BindNet, a method that learns protein-ligand binding representations from fine-grained interactions. Specifically, our self-supervised learning problem involves predicting the final binding complex structure given a primary pocket and ligand, which mirrors the protein-ligand interaction process. To emphasize learning fundamental interactions, we employ a dedicated Transformer-based interaction module that builds on separate pocket and ligand encoders. To ensure that the model learns interaction-aware protein and ligand representations, we use two distinct pre-training objectives. The first is Atomic pairwise Distance map Prediction (ADP), where the interaction distance map between protein and ligand atoms provides detailed supervision signals about their interactions. The second is Mask Ligand Reconstruction (MLR), in which the ligand representation extracted by a 3D pre-trained encoder is masked and then reconstructed. Because masking and reconstruction happen in feature space rather than on tokens or atom types, the model is more likely to capture richer semantic information, such as chemical and shape information, during pre-training.
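The two objectives can be illustrated with a minimal, dependency-free sketch. It is not the BindNet implementation: the function names, the use of mean squared error for both losses, the zero mask vector, and the 50% mask ratio are all assumptions made for illustration, and the toy feature vectors merely stand in for the output of a 3D pre-trained ligand encoder.

```python
import math
import random


def pairwise_distance_map(pocket_xyz, ligand_xyz):
    """Euclidean distance between every pocket atom and every ligand atom.

    Returns a nested list with shape [num_pocket_atoms][num_ligand_atoms].
    """
    return [[math.dist(p, l) for l in ligand_xyz] for p in pocket_xyz]


def adp_loss(pred_map, target_map):
    """Mean squared error over all distance-map entries (assumed loss)."""
    sq_errors = [
        (pred - target) ** 2
        for pred_row, target_row in zip(pred_map, target_map)
        for pred, target in zip(pred_row, target_row)
    ]
    return sum(sq_errors) / len(sq_errors)


def mask_ligand_features(features, mask_ratio, mask_vector, rng):
    """Replace a random subset of per-atom feature vectors with mask_vector.

    Returns the masked copy and the sorted list of masked atom indices.
    """
    num_masked = max(1, round(len(features) * mask_ratio))
    masked_idx = sorted(rng.sample(range(len(features)), num_masked))
    chosen = set(masked_idx)
    masked = [
        list(mask_vector) if i in chosen else list(vec)
        for i, vec in enumerate(features)
    ]
    return masked, masked_idx


def mlr_loss(reconstructed, target, masked_idx):
    """Mean squared error in feature space, computed on masked atoms only."""
    sq_errors = [
        (r - t) ** 2
        for i in masked_idx
        for r, t in zip(reconstructed[i], target[i])
    ]
    return sum(sq_errors) / len(sq_errors)


# Toy complex: two pocket atoms and one ligand atom (hypothetical coordinates).
pocket_xyz = [(0.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
ligand_xyz = [(0.0, 4.0, 0.0)]
target_map = pairwise_distance_map(pocket_xyz, ligand_xyz)

# Toy ligand features standing in for a 3D pre-trained encoder's output.
ligand_features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
masked_features, masked_idx = mask_ligand_features(
    ligand_features, mask_ratio=0.5, mask_vector=[0.0, 0.0], rng=random.Random(0)
)
```

In this sketch the ADP target covers only pocket-ligand atom pairs, matching the "interaction distance map" described above, and the MLR loss is evaluated only at masked positions so that unmasked atoms contribute no trivial reconstruction signal. In a real model, the predicted map and the reconstructed features would come from the interaction module rather than being supplied by hand.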

Experiments

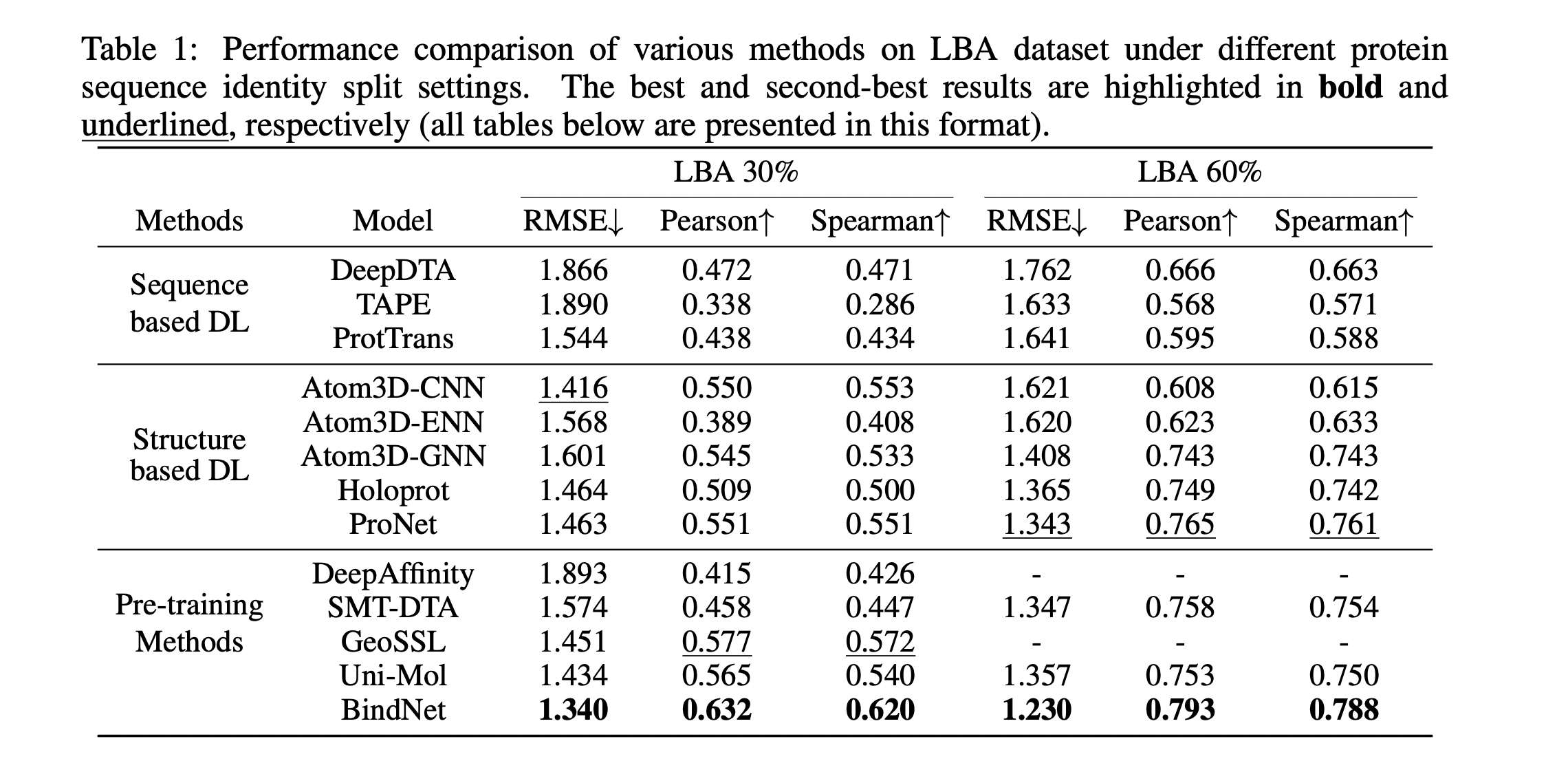

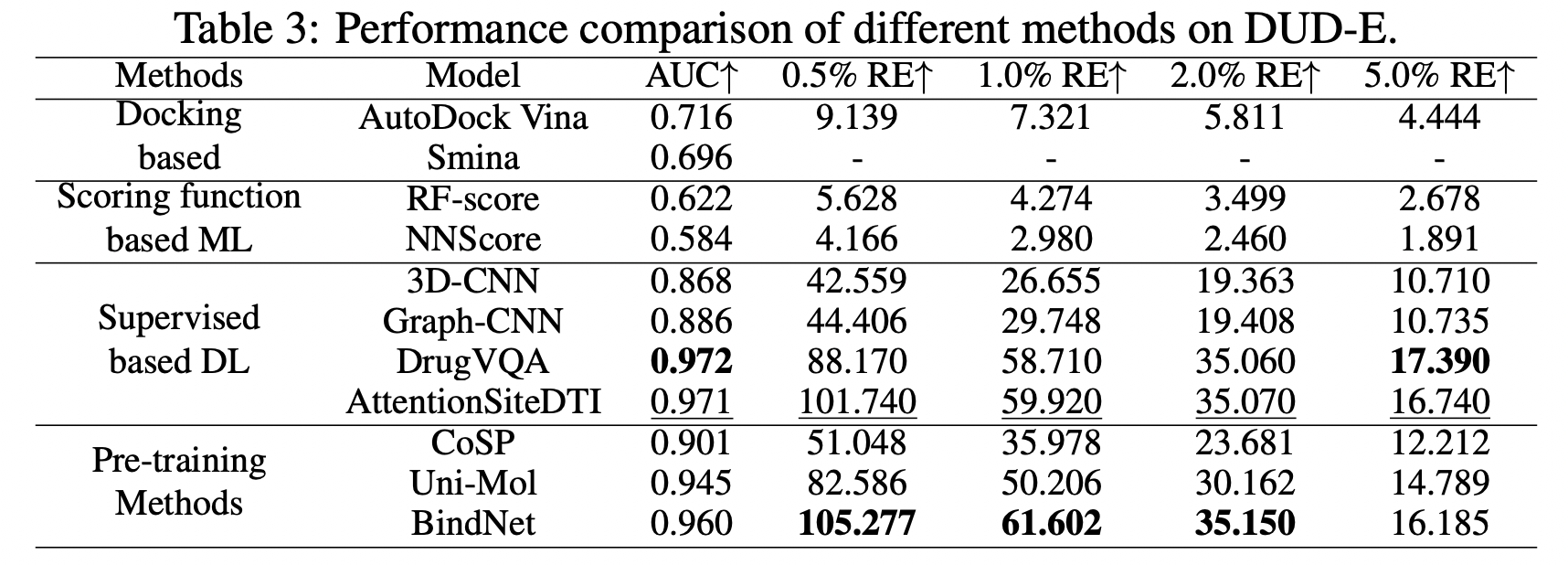

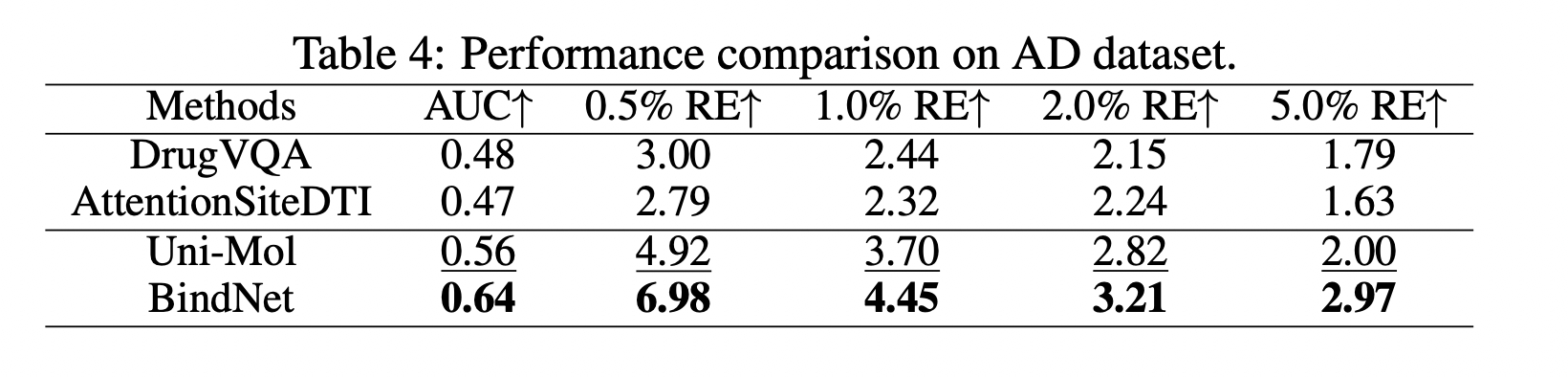

Extensive experiments demonstrate the superiority of BindNet across a range of binding tasks: protein-ligand affinity prediction, virtual screening, and protein-ligand docking.